Computational Information Design

8 Closing

8.1

the design of information through computational means

This thesis presents how several typically separate disciplines are combined to focus on a process for understanding data. Through the combination of these fields, a single, unique discipline of Computational Information Design is formed. A key aim of this dissertation is to make the process accessible to as large an audience as possible, both through the cursory introductions to the multiple disciplines in the “Process” chapter, and online via the programming tool described in chapter six. The tool has been developed and tested with more than ten thousand users, a practical use that demonstrates the usability of the software and the ability of others to create with it. The examples in the second and fourth chapters serve to demonstrate that the process is grounded in actual implementation rather than being simply a rhetorical exercise.

8.2

applications

A near limitless number of applications exist for data that needs to be understood. The most interesting problems are those that seem too “complicated” because the amount of data makes it nearly impossible to get a broad perspective on how the underlying system works.

This might be applied to understanding financial markets or simply the tax code. Companies trying to track purchases, distribution, and delivery via RFID tags face the same issue: more data collection without a means to understand it. Network monitoring remains an open problem: is there an intruder? Has a machine been hacked or a password stolen? In software, ways of understanding ever more complex systems, whether an operating system or a large-scale project, will be necessary. Looking at math and statistical modeling, what does a Hidden Markov Model look like? How do we know a neural network is doing what it’s supposed to? Security and intelligence services have an obvious data problem: how to sift through the million bits of information received daily for legitimate threats, without doing so in a manner that infringes on the civil liberties of individuals.

8.3

education

Dissemination is a pragmatic issue of simply making Computational Information Design known to a wider audience through publication, presentation, and the production of more examples. This is limited, however, by this author’s output.

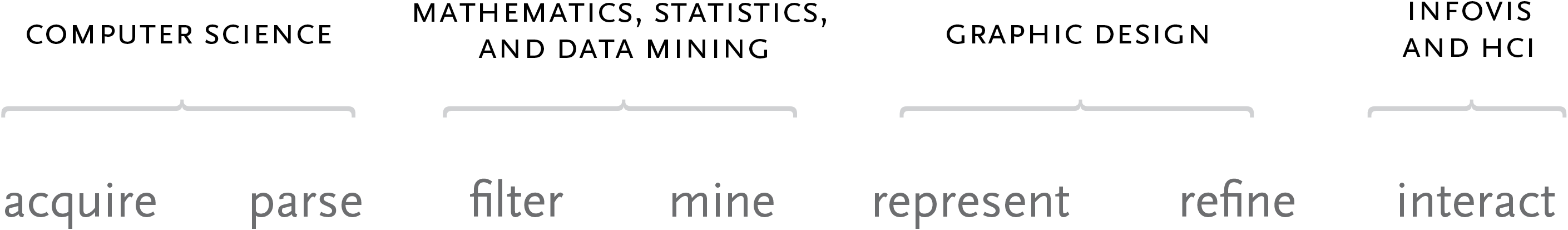

An alternative is to consider how this line of study might be taken up in a school setting, where practitioners of the individual fields (computer science, statistics, graphic design, and information visualization) are brought together to teach parts of a curriculum that cover all aspects of data handling.

Attempts at such cross-disciplinary curricula are not uncommon. Some architecture and design schools have core courses that include computer programming alongside more traditional basics like drawing. The problem with these programs is that the focus is on a well-rounded student, rather than on the relevant content of what is enabled by the “well-roundedness” to which they aspire. The result is that the programming skills (or others deemed irrelevant) are forgotten not long after (even before?) completion of the course.

The issue is understanding the relevance, and practicing the field. Perhaps by focusing on the goal of understanding data, the individual fields might be given more relevance. The focus would be on what each area offers to that goal, in addition to the mind-stretching that comes naturally from learning a topic previously out of one’s reach. Some of this author’s least favorite high school courses were in Geometry and Linear Algebra, up until graduate school, when he developed an intuitive sense of what concepts like sine and cosine meant, and they became everyday components of the work seen in this thesis. A previous belief that Computer Graphics was about making awful 3D landscapes and virtual reality creatures was traded for an understanding of what software-based graphics brought to the field of Information Design.

8.4

tools

Another challenge of this work is to improve the tools. One side of this challenge is to make simpler tools, making them faster for prototyping than writing software code, as well as usable by more people. The other side is that they must be more powerful, enabling more sophisticated work. Software tools for design (like Illustrator, PageMaker, and Fontographer) led a revolution in layout, design, and typography by removing the burden of rigid printing and typesetting media.

Ideally, the process of Computational Information Design would be accompanied by a still more advanced toolset that supports working with issues of data, programming, and aesthetics in the same environment. The logical ideal for such a toolset would be an authoring environment for data representation, combining the elements of a data-rich application like Microsoft Excel with the visual control of design software like Adobe Illustrator and an authoring environment like MATLAB. The development of this software environment is outside the scope of this thesis because a disproportionate amount of time would be spent on end-user aspects of software engineering, rather than on using it to develop relevant projects. Such a toolset would be less code-oriented for the aspects of visual construction, employing direct manipulation tools as in Illustrator. Yet the on-screen instantiations of data, rather than being fixed points in space, would be assigned to values from a table or a database.
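The idea of on-screen elements being assigned to values from a table, rather than to fixed points, can be illustrated with a minimal sketch. This is not the toolset described above, only an assumption of how such a binding might look in code: the names `linear_map`, `BoundMark`, and `bind_marks`, the sample table, and the canvas dimensions are all hypothetical.

```python
import csv
import io
from dataclasses import dataclass


def linear_map(value, in_min, in_max, out_min, out_max):
    """Rescale value from [in_min, in_max] to [out_min, out_max]."""
    if in_max == in_min:
        # Degenerate column: place everything at the middle of the range.
        return (out_min + out_max) / 2
    return out_min + (value - in_min) * (out_max - out_min) / (in_max - in_min)


@dataclass
class BoundMark:
    """A visual mark whose position is derived from a table row."""
    label: str
    x: float
    y: float


def bind_marks(rows, x_col, y_col, width, height):
    """Assign each row a screen position from two of its columns."""
    xs = [float(r[x_col]) for r in rows]
    ys = [float(r[y_col]) for r in rows]
    marks = []
    for r, xv, yv in zip(rows, xs, ys):
        x = linear_map(xv, min(xs), max(xs), 0, width)
        # Screen y grows downward, so the output range is flipped.
        y = linear_map(yv, min(ys), max(ys), height, 0)
        marks.append(BoundMark(r["label"], x, y))
    return marks


# Hypothetical sample table; a file or database query could stand in here.
SAMPLE = "label,x,y\na,0,10\nb,5,20\nc,10,30\n"
rows = list(csv.DictReader(io.StringIO(SAMPLE)))
marks = bind_marks(rows, "x", "y", width=200, height=100)

for m in marks:
    print(m.label, m.x, m.y)
```

Changing a value in the table and rebinding moves the corresponding mark, which is the behavior a direct-manipulation tool of this kind would need to expose without requiring the user to write the mapping by hand.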

A step further would look at how to make the visualization of data more like a kind of “kinetic information sculpture,” or a lathe for data. The text medium of programming provides great flexibility but amounts to sensory deprivation compared to the traditional tools of artists, which employ a greater range of senses. What if the design of information were more like glass blowing? As interfaces for physical computing become more prevalent, what will this mean for how data is handled? Having mapped out the field of Computational Information Design, all the interesting work lies in helping it mature.