I’ve been trying to organize my thoughts about the iPad and the direction that Apple is taking computing along with it. It’s really an extension of the way they look at the iPhone, which I found unsettling at the time but with the iPad, we’re all finally coming around to the idea that they really, really mean it.

I want to build software for this thing. I’m really excited about the idea of a touch-screen computing platform that’s available for general use from a known brand that has successfully marketed unfamiliar devices to a wide audience. (Compare this to, say, Microsoft’s Tablet PC push that began in the mid-2000s and is… nowhere?)

It represents an incredible opportunity, but I can’t get excited about it because of Apple’s attempt to control who creates for it, and what they can create for it. Their policy of being the sole distributor of applications, and even worse, requiring approval on all applications, is insulting to developers. Even the people who have created Mac software for years are being told they can no longer be trusted.

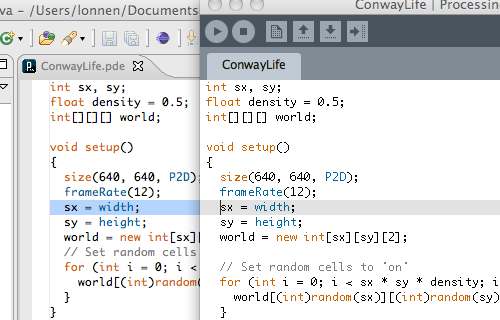

I find it offensive on a very basic level, because I know that if such restrictions were in place when I was first learning to write software — mostly on Apple machines, no less — I would not have a career in the field. Or if we had to pay regular fees to become a developer, use only Apple-provided tools, and could release only approved software through an Apple store, things like the Processing project would not have happened. I can definitively say that any success that I’ve had has come from the ability to create what I want, the way that I want, and to be able to distribute it as I see fit, usually over the internet.

As background, I’m writing this as a long-time Apple user who started with an Apple ][+ and later the original 128K Mac. A couple months ago, Apple even profiled my work here.

You’ll shoot your eye out, kid!

There’s simply no reason to prevent people from installing anything they want on the iPad. The same goes for the iPhone. When the iPhone appeared, Steve Jobs made a ridiculous claim that a rogue application could “take down the network.” That’s an insult to common sense (if it were true, then the networks have a serious, serious design flaw). It’s also an insult to our intelligence, except for the Apple fans who repeat this ridiculous statement.

But even if you believed the Bruce Willis movie version of how mobile networks are set up, it simply does not hold true for the iPad (and the iPod Touch before it). The $499 iPad that has no data network hardware is not in danger of “taking down” anyone’s cell network, but applications will still be required to go through the app store and therefore, its approval process.

The irony is that the original Macintosh was nearly a failure because of Jobs’ insistence at the time on how closed the machine must be. I recall reading that it was rescued by engineers who developed AppleTalk networking in spite of Jobs’ insistence on keeping the Macintosh an island unto itself. Networking helped make the “Macintosh Office” possible by connecting a series of Macs to the laser printer (introduced at the same time), and so followed the desktop publishing revolution of the mid-80s. Until that point, the 128K Macintosh was largely a $2500 novelty.

For the amazing number of lessons that Jobs seems to have learned in his many years in technology, his insistence on control is to me a glaring omission. It’s sad that Jobs groks the idea of computers designed for humans, but then consistently slides into unnecessary lockdown restrictions. It’s an all-too-human failing of wanting too much control.

Only available on the Crapp Store!

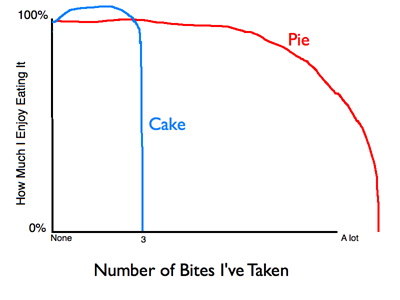

For all the control that Apple’s taken over the content on the App Store, it hasn’t prevented the garbage. Applications for jiggling boobs or shaking babies have somehow made it through the same process that delayed the release or update of many other developers’ applications for weeks. Some have been removed, but only after an online uproar of keyboards and pitchforks. This is the same approval process that OKs flashlight apps and fart apps by the dozen.

Obvious instances aside, the line of “appropriate” will always be subjective. The line changed last week when Apple decided to remove 5,000 “overtly sexual” applications, which might make sense, but is instead hypocritical when they don’t apply the same criteria to established names like Playboy.

Somebody’s forgetting the historical mess of “I know it when I see it.” It’s an un-answerable dilemma (or is that an enigma?), so why place yourself in a situation of being arbiter?

Another banned application was a version of Dope Wars, a game that dates back to the mid-80s. Inappropriate? Maybe. A problem? Only if children have been turning to lives of crime since its early days as an MS-DOS console program, or on their programmable TI calculators. Perhaps the faux-realistic interface style of the iPhone OS tipped the scales.

The problem is that fundamentally, it’s just never going to be possible to prevent the garbage. If you want to have a boutique, like the Apple retail stores, where you can buy a specially selected subset of merchandise from third parties, then great. But instead, we’ve conflated wanting to have that kind of retail control (a smart idea) with the only conduit by which software can be sold for the platform (an already flawed idea).

Your toaster doesn’t need a hierarchical file system

Anyone who has spent five minutes helping someone with their computer will know that the overwhelming majority don’t need full access to the file system, and that it’s a no-brainer to begin hiding as much of it as possible. The idea of the iPad as appliance (and the iPhone before it) is an obvious, much needed step in the user interface of computing devices.

(Of course, the hobbyist in me doesn’t want that version, since I still want access to everything, but most people I know who don’t spend all their time geeking out on the computer have no use for the confusion. I’m happy to separate those parts.)

And frankly, it’s an obvious direction, and it’s actually much closer to very early versions of Mac OS (the original System and Finder) than it is to OS X. Mac OS X is as complicated as Windows. My father, also an early Mac user, began using PCs as Apple fell apart in the late 90s. He hasn’t returned to the Mac largely because of the learning curve for OS X, which is no longer head and shoulders above Windows in terms of its ease of use. Surely the overall UI is better, clearer, and more thoughtfully put together. But the reason to switch nowadays is less to do with the UI, and more to do with the way that one can lose control of their Windows machines due to the garbage installed by PC vendors, the required virus scanning software, the malware scanning software, and all the malware that gets through in spite of it all.

The amazing Steven Frank, co-founder of Panic, puts things in terms of Old World and New World computing. But I believe he’s mixing the issue of the device feeling (and working) in a more appliance-like fashion with the issue of who controls what goes on the device, and how it’s distributed to the device. I’m comfortable with the idea that we don’t need access to the file system, and it doesn’t need to feel like a “computer.” I’m not comfortable with people being prevented by a licensing agreement, or worse, sued, for hacking the device to work that way.

It Just Works, except when It Doesn’t

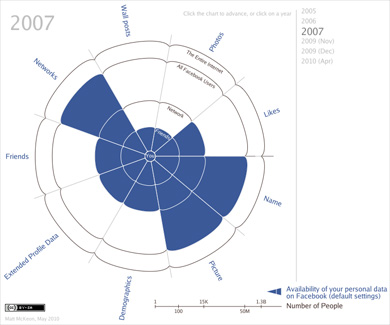

The “it just works” mantra often credited to Apple is — to borrow the careful elocution of Steve Jobs — “bullshit.” To use an example, if things “just worked” then I’d be able to copy music from my iPod back to my laptop, or from one machine that I own to another. If I’ve paid for that music (whether it’s DRM-free or even if I made the MP3 myself), there’s simply no reason that I should be restricted from copying this way. Instead we have the assumption that I’m doing something illegal built into the software, and preventing obvious use.

Of course, I assume that as implemented, this feature is something that was “required” by the music industry. But to my knowledge, there’s simply no proof of that. No such statement has been made, and more likely, it’s simply easier for Apple fans to blame the “evil music industry” or “evil RIAA.” This thinking avoids noticing that Apple has also demanded similar restrictions for others’ projects, in a case where they actually have control over such matters.

Bottom line, trying to save the music collection of a family member whose laptop has crashed is a great time, and it’s only made better by taking the time to dig up a piece of freeware that will let me copy the music from their iPod back to their now blank machine. The music that they spent so much money on at the iTunes Store.

Like “don’t be evil,” the “it just works” phrase applies, or it doesn’t. Let’s not keep repeating the mantra and conveniently ignoring the times when the opposite is true.

It’s been a long couple of months, and it’s only getting longer

One of the dumbest things that I’ve seen in the two months since the iPad announcement is articles that compare the device to other computers and complain that it doesn’t have feature x, y, or z. That’s silly to me because it’s not a general purpose computer like we’re used to. And yes, I’m fully aware of the irony of that statement if you take it too literally. I am in fact complaining about what’s missing from the iPad (and iPhone), though it’s about things that have been removed or disallowed for reasons of control, and whose absence doesn’t actually improve the experience of using the device. Now stop thinking so literally.

The thing that will be interesting about the iPad is the experience of using it — something that nobody has had except for the folks at Apple — and as is always the case when dealing with a different type of interface, you’re always going to be wrong.

So what is it? I’m glad you asked…

Who is this for?

As Teri likes to point out, it’s also important to note the appeal of this device to a different audience — our parents. They need something like an iPhone, with a bigger screen, that allows them to browse the internet and read lots of email and answer a few. (No word yet on whether the iPad will have the ability to forward YouTube videos, chain e-mails, or internet jokes.) For them, “it’s just a big iPhone” is a selling point. The point is not that the iPad is for old people, the point is that it’s a new device category that will find its way into interesting niches that we can’t ascertain until we play with the thing.

Any time you have a new device, such as this one, it also doesn’t make a lot of sense. It simply doesn’t fit with anything that we’re currently used to. So we have a lot of lazy tech writers who go on about how it’s under-featured (it’s a small computer! it’s a big phone!) or that it doesn’t make sense in the lineup. This is a combination of a lack of creativity (rather than tearing the thing down, think about how it might be used!) and perhaps the interest of filling column inches in spite of the fact that none of these people has used the device, so we simply don’t know. It’s part of what’s so dumb about pre-game shows for sports. What could be more boring than a bunch of people arguing about what might happen? The only thing that’s interesting about the game is what does happen (and how it happens). I know you’ve got to write something, but man, it’s gonna be a long couple weeks until the device arrives.

It’s Perfect! I love it like it is.

There’s also talk about the potential disappearance of extensions or plug-in applications. While Mac OS extensions (of OS 9 and earlier) were a significant reason for crashes on older machines, they also contributed to the success of the platform. Those extensions wouldn’t be installed if there weren’t a reason, and the fact is, they were valuable enough that it was worth the occasional sobs for an hour of lost work after a system crash to have them present.

I think the anti-extension arguments come from people who are imagining the ridiculous number of extensions on others’ machines, but disregarding the fact that they badly needed something like Suitcase to handle the number of fonts on their system. As time goes on, people will want to do a wider range of things with the iPhone/iPad OS too. The original Finder and System had a version 3 too (actually they skipped 3.0, but nevermind that), just like the iPhone. Go check that out, and now compare it to OS X. The iPhone OS will get crapped up soon enough. Just as installing more than 2-3 pages of apps on the iPhone breaks down the UI (using search is not the answer — that’s the equivalent of giving up in UI design), I’m curious to see what the oft-rumored multitasking support in iPhone OS 4 will do for things.

And besides, without things like Windowshade, what UI elements could be licensed (or stolen) and incorporated into the OS? Ahem.

I’d never bet against people who tinker, and neither should Apple.

I haven’t even covered issues from the hardware side, in spite of having grown up taking apart electronics and in awe of the Heathkit stereo my dad built. But it’s the sort of thing that disturbs our friends at MAKE, and others have written about similar issues. Peter Kirn has more on just how bad the device is in terms of openness. One of the most egregious hardware problems is that the device’s connection to the outside world is a proprietary port, access to which has to be licensed from Apple. This isn’t just a departure from the Apple ][ days of having actual digital and analog ports on the back (it was like an Arduino! but slower…); it’s not even something more standard like USB.

But why would you artificially keep this audience away? To make a couple extra percent on licensing fees? How sustainable is that? Sure it’s a tiny fraction of users, but it’s some of the most important — the people who are going to do new and interesting things with your platform, and take it in new directions. Just like the engineers who sneaked networking into the original Macintosh, or who built entire industries around extending the Apple ][ to do new things. Aside from the schools, these were the people who kept that hardware relevant long enough for Apple to screw up the Lisa and Mac projects for a few years while they got their bearings.

Enough…

I am not a futurist, but at the end of it all, I’m pretty disappointed by where things seem to be heading. I spend a lot of effort on making things, and trying to get others to make things, and having someone in charge of what I make, and how I distribute it is incredibly grating. And the fact that they’re having this much success with it is saddening.

It may even just work.

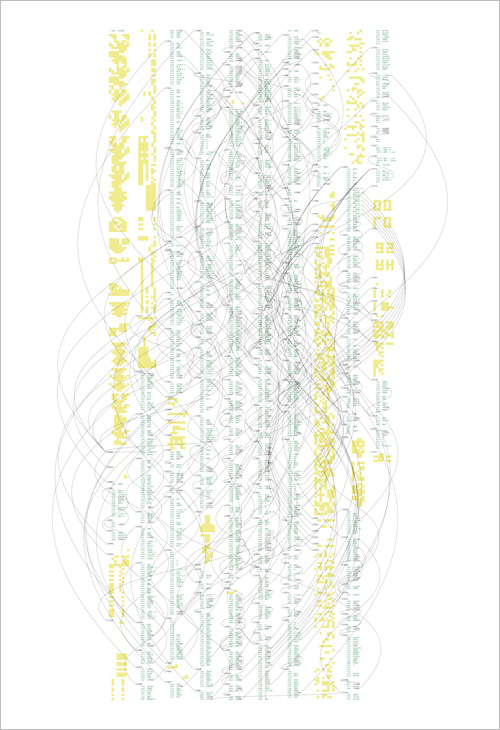

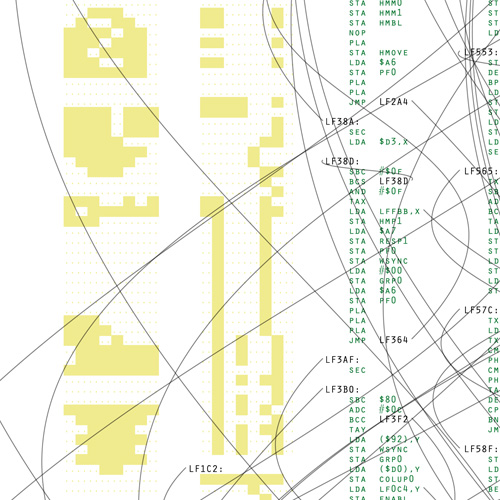
