Whups! That’s not the right one. Here we go:

Today, on November 24, 2008, we launch the 1.0 version of the Processing software. Processing is a programming language, development environment, and online community that since 2001 has promoted software literacy within the visual arts. Initially created to serve as a software sketchbook and to teach fundamentals of computer programming within a visual context, Processing quickly developed into a tool for creating finished professional work as well.

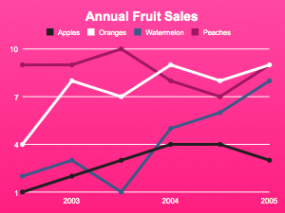

Processing is a free, open source alternative to proprietary software tools with expensive licenses, making it accessible to schools and individual students. Its open source status encourages the community participation and collaboration that is vital to Processing’s growth. Contributors share programs, contribute code, answer questions in the discussion forum, and build libraries to extend the possibilities of the software. The Processing community has written over seventy libraries to facilitate computer vision, data visualization, music, networking, and electronics.

Students at hundreds of schools around the world use Processing for classes ranging from middle school math education to undergraduate programming courses to graduate fine arts studios.

- At New York University’s graduate ITP program, Processing is taught alongside its sister project Arduino and PHP as part of the foundation course for 100 incoming students each year.

- At UCLA, undergraduates in the Design | Media Arts program use Processing to learn the concepts and skills needed to imagine the next generation of web sites and video games.

- At Lincoln Public Schools in Nebraska and the Phoenix Country Day School in Arizona, middle school teachers are experimenting with Processing to supplement traditional algebra and geometry classes.

Tens of thousands of companies, artists, designers, architects, and researchers use Processing to create an incredibly diverse range of projects.

- Design firms such as Motion Theory provide motion graphics created with Processing for the TV commercials of companies like Nike, Budweiser, and Hewlett-Packard.

- Bands such as R.E.M., Radiohead, and Modest Mouse have featured animation created with Processing in their music videos.

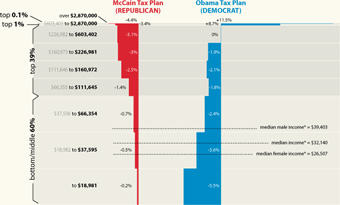

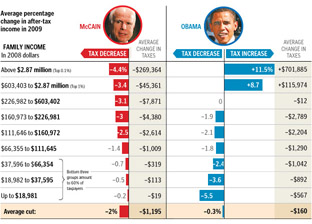

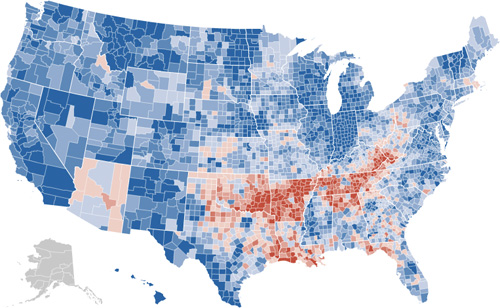

- Publications such as the journal Nature, the New York Times, Seed, and Communications of the ACM have commissioned information graphics created with Processing.

- The artist group HeHe used Processing to produce their award-winning Nuage Vert installation, a large-scale public visualization of pollution levels in Helsinki.

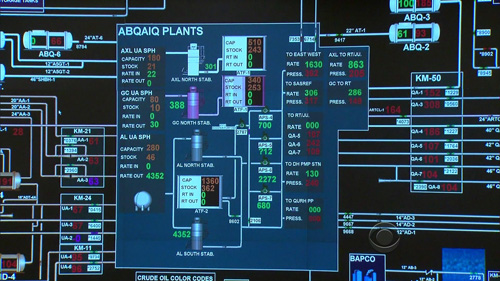

- The University of Washington’s Applied Physics Lab used Processing to create a visualization of a coastal marine ecosystem as a part of the NSF RISE project.

- The Armstrong Institute for Interactive Media Studies at Miami University uses Processing to build visualization tools and analyze text for digital humanities research.

The Processing software runs on the Mac, Windows, and GNU/Linux platforms. With the click of a button, it exports applets for the Web or standalone applications for Mac, Windows, and GNU/Linux. Graphics from Processing programs may also be exported as PDF, DXF, or TIFF files, among many other formats. Future Processing releases will focus on faster 3D graphics, better video playback and capture, and an enhanced development environment. Some experimental versions of Processing have been adapted to other languages such as JavaScript, ActionScript, Ruby, Python, and Scala; other adaptations bring Processing to platforms like the OpenMoko, iPhone, and OLPC XO-1.

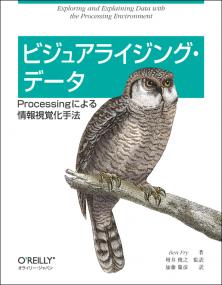

Processing was founded by Ben Fry and Casey Reas in 2001 while both were John Maeda’s students at the MIT Media Lab. Further development has taken place at the Interaction Design Institute Ivrea, Carnegie Mellon University, and UCLA, where Reas is chair of the Department of Design | Media Arts. Miami University, Oblong Industries, and the Rockefeller Foundation have generously contributed funding to the project.

The Cooper-Hewitt National Design Museum (a Smithsonian Institution) included Processing in its National Design Triennial. Works created with Processing were featured prominently in the Design and the Elastic Mind show at the Museum of Modern Art. Numerous design magazines, including Print, Eye, and Creativity, have highlighted the software.

For their work on Processing, Fry and Reas received the 2008 Muriel Cooper Prize from the Design Management Institute. The Processing community was awarded the 2005 Prix Ars Electronica Golden Nica award and the 2005 Interactive Design Prize from the Tokyo Type Directors Club.

The Processing website includes tutorials, exhibitions, interviews, a complete reference, and hundreds of software examples. The Discourse forum hosts continuous community discussions and dialog with the developers.
