Is Processing a Language?

This question is covered in the FAQ on Processing.org, but still tends to reappear on the board every few months (most recently here). Someone once described Processing syntax as a dialect of Java, which sounds about right to me. It’s syntax that we’ve added on top of Java to make things a little easier for a particular work domain (roughly, making visual things). There’s also a programming environment that significantly simplifies what’s found in traditional IDEs. Plus there’s a core API set (and a handful of core libraries) that we’ve built to support this type of work. If we did these in isolation, none would really stick out:

- The language changes are pretty minimal. The big difference is probably how they integrate with the IDE that’s built around the idea of sitting down and quickly writing code (what we call sketching). We don’t require users to first learn class definitions or even method declarations before they can show something on the screen, which helps avoid some of the initial head-scratching that comes from trying to explain “public class” or “void” or beginning programmers. For more advanced coders, it helps Java feel a bit more like scripting. I use a lot of Perl for various tasks, and I wanted to replicate the way you can write 5-10 lines of Perl (or Python, or Ruby, or whatever) and get something done. In Java, you often need double that number of lines just to set up your class definitions and a thread.

- The API set is a Java API. It can be used with traditional Java IDEs (Eclipse, Netbeans, whatever) and a Processing component can be embedded into other applications. But without the rest of it (the syntax and IDE), Processing (API or otherwise) it would not be as widely used as it is today. The API grew out of Casey and I’s work, and our like/dislike of various approaches used by libraries that we’ve used: Postscript, QuickDraw, OpenGL, Java AWT, even Applesoft BASIC. Can we do OpenGL but still have it feel as simple as writing graphics code on the Apple ][? Can we simplify current graphics approaches so that they at least feel simpler like the original QuickDraw on the Mac?

- The IDE is designed to make Java-style programming less wretched. Check out the Integration discussion board to see just how un-fun it is to figure out how the Java CLASSPATH and java.library.path work, or how to embed AWT and Swing components. These frustrations and complications sometimes are even filed as bugs in the Processing bugs database by users who have apparently become spoiled by not having to worry about such things.

If pressed, perhaps the language itself is probably the easiest to let go of—witness the Python, Ruby and now JavaScript versions of the API, or the C++ version that I use for personal work (when doing increasingly rare C++ projects). And lots of people build Processing projects without the preprocessor and PDE.

In some cases, we’ve even been accused of not being clear that it’s “just Java,” or even that Processing is Java with a trendy name. Complaining is easier than reading, so there’s not much we can do for people who don’t glance at the FAQ before writing their unhappy screeds. And with the stresses of the modern world, people need to relieve themselves of their angst somehow. (On the other hand, if you’ve met either of us, you’ll know that Casey and I are very trendy people, having grown up in the farmlands of Ohio and Michigan.)

However, we don’t print “Java” on every page of Processing.org for a very specific reason: knowing it’s Java behind the scenes doesn’t actually help our audience. In fact, it usually causes more trouble than not because people expect it to behave exactly like Java. We’ve had a number of people who copy and pasted code from the Java Tutorial into the PDE, and are confused when it doesn’t work.

(Edit – In writing this, I don’t want to understate the importance of Java, especially in the early stages of the Processing project. It goes without saying that we owe a great deal to Sun for developing, distributing, and championing Java. It was, and is, the best language/environment on which to base the project. More about the choice of language can be found in the FAQ.)

But for as much trouble as the preprocessor and language component of Processing is for us to develop (or as irrelevant it might seem to programmers who already code in Java), we’re still not willing to give that up—damned if we’re gonna make students learn how to write a method declaration and “public class Blah extends PApplet” before they can get something to show up on the screen.

I think the question is a bit like the general obsession of people trying to define Apple as a hardware or software company. They don’t do either—they do both. They’re one of the few to figure out that the distinction actually gets in the way of delivering good products.

Now, whether we’re delivering a good product is certainly questionable—the analogy with Apple may, uh, end there.

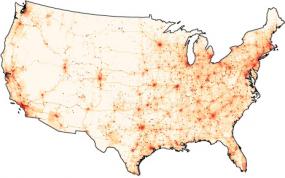

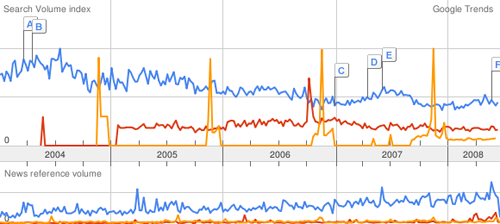

Maximillian Dornseif has adapted

Maximillian Dornseif has adapted  Derek Jeter vs. Objective Reality

Derek Jeter vs. Objective Reality

Indiana Jones and the Fonts on the Maps

Indiana Jones and the Fonts on the Maps